In today’s fast-paced world of digital marketing, staying ahead of the competition is paramount. One way to gain a competitive edge is through the strategic use of A/B testing.

This is a powerful tool for marketers, product developers and designers to optimise their digital efforts by testing different variations and identifying what resonates most with their audience. Imagine you’re running an e-commerce website and want to optimise your product page to maximise conversions. With an A/B test, you compare two versions of a web page or email that differ in a single element, such as the placement of a button or the colour of a headline, showing each version to a random share of your visitors and measuring which one performs better.

This simple test can provide insights into what drives users to convert and enable data-driven decision-making to improve your bottom line.
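Behind the scenes, the two versions need to be shown to comparable, randomly formed groups of visitors. One common approach is to assign each visitor deterministically by hashing a stable visitor ID, so a returning visitor always sees the same version. The sketch below assumes such an ID exists (for example from a first-party cookie). The function name, experiment name and 50/50 split are illustrative and not taken from any particular testing tool.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically assign a visitor to 'A' (control) or 'B' (variant).

    Hashing the experiment name together with the visitor ID means a returning
    visitor always lands in the same group, while different experiments get
    independent splits. `split` is the share of traffic sent to the control.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits (32 bits) onto the interval [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "A" if bucket < split else "B"


if __name__ == "__main__":
    # Hypothetical visitor IDs and experiment name, for illustration only.
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}", "product-page-button")] += 1
    print(counts)  # close to a 50/50 split
```

Because assignment depends only on the two IDs, no lookup table is needed and the same visitor gets the same version on every page load.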

It’s important to note that A/B testing should be conducted with a clear hypothesis and a sufficiently large sample size. Without a clear hypothesis, you may end up testing things that are not important or relevant to your audience. And without enough visitors in each variation, your results may be unreliable, and a real difference may fail to reach statistical significance.

Enhancing A/B Test Results

Test One Variable at a Time: To obtain accurate and actionable insights, test a single variable at a time. By focusing on one element, such as headline copy, button design, or page layout, you can isolate the impact of that specific change on user behaviour. This makes it clear which change resonates with your audience and drives the desired outcome.
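One way to enforce this discipline is to describe each version as a set of element settings and check, before launch, that the control and the variant differ in exactly one of them. The element names and values below are hypothetical, and the check itself is a simple sketch rather than a feature of any testing platform.

```python
def changed_elements(control: dict, variant: dict) -> set:
    """Return the page elements whose settings differ between two versions."""
    keys = control.keys() | variant.keys()
    return {k for k in keys if control.get(k) != variant.get(k)}


def check_single_variable(control: dict, variant: dict) -> str:
    """Return the one changed element, or raise if zero or several changed."""
    diff = changed_elements(control, variant)
    if len(diff) != 1:
        raise ValueError(f"Expected exactly one changed element, found {sorted(diff)}")
    return diff.pop()


# Hypothetical page settings: the variant only changes the button colour.
control = {"headline": "Free shipping on all orders",
           "button_colour": "green",
           "layout": "two-column"}
variant = {**control, "button_colour": "orange"}

print(check_single_variable(control, variant))  # button_colour
```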

Establish a Clear Objective: Before diving into an A/B test, define a clear objective. Are you looking to increase click-through rates, reduce bounce rates, or boost overall conversion rates? A well-defined objective provides direction and ensures that your test aligns with your business goals. It also allows you to evaluate the success of your experiment against specific key performance indicators (KPIs).

Utilise a Substantial Sample Size: To make confident decisions based on your A/B test results, make sure each variation receives enough visitors for the test to be able to reach statistical significance. A healthy volume of traffic matters because it determines how quickly you can collect that sample and how many experiments you can run. A high-traffic website can run split tests across several segments while preserving the validity and reliability of the results.

Testing on a small sample may lead to unreliable or inconclusive results. By utilising a large enough sample, you can gain confidence in the data and draw more accurate conclusions about the impact of the tested element.
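The required sample size can be estimated before the test starts. The sketch below uses the standard normal-approximation formula for comparing two conversion rates with a two-sided test. The baseline rate, the minimum relative lift you want to detect, the 5% significance level and the 80% power are all assumptions to replace with your own figures.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variation to detect a relative lift of
    `relative_mde` over `baseline_rate` with a two-sided two-proportion test
    (normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical inputs: 5% baseline conversion rate, aiming to detect a 10% relative lift.
print(sample_size_per_variant(0.05, 0.10))
```

With a 5% baseline and a 10% relative lift (5% to 5.5%), this comes to roughly 31,000 visitors per variation, which shows why low-traffic sites struggle to detect modest improvements.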

To make full use of your traffic for split testing, you need a solid understanding of traffic segmentation: grouping visitors into distinct segments based on parameters such as traffic source, behaviour, and demographics. Segments that show promising potential can then become the focus of your split tests. Concentrating on high-impact pages with notable traffic also deepens your understanding of user behaviour and interactions, which in turn generates viable hypotheses for split testing.

Segment Your Audience: Not all customers are created equal, and their preferences and behaviours can vary. By segmenting your audience, you can conduct A/B tests on specific groups to understand how different elements resonate with each segment. This allows you to personalise your marketing efforts and optimise the user experience for various customer segments.

Prioritising A/B Test Hypotheses

It’s crucial to start with a well-articulated hypothesis that identifies the variable you believe might influence your website’s performance. When choosing a variable, user research is a good starting point. Then use quantitative data from analytics to find potential areas for improvement, and qualitative data such as user feedback, evaluations, and insights from user tests to pin down concrete variables. Combining these insights provides a holistic view of customer preferences and helps identify the areas with the greatest potential for improvement.

Also analyse historical data to identify patterns and pain points, and look for opportunities to optimise the areas that have the greatest impact on your KPIs. Basing your decisions on data lets you prioritise the hypotheses most likely to produce the largest positive results.

Achieving Statistical Significance in Split Testing

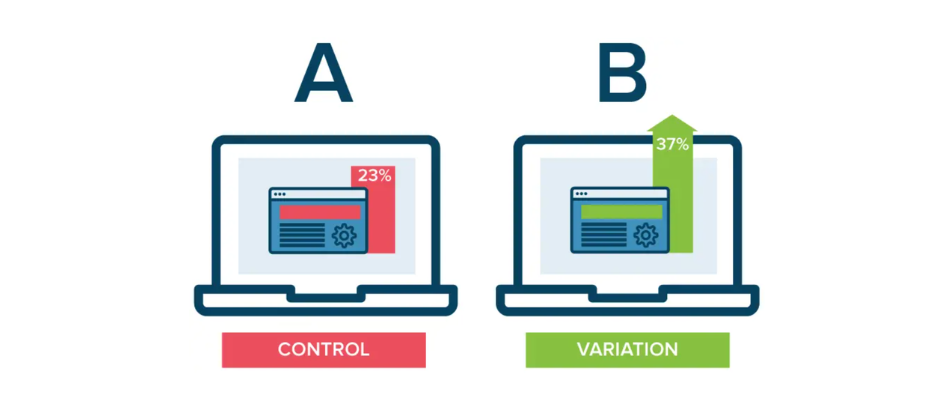

Statistical significance plays a pivotal role in split testing. It guides the interpretation of experiment results, indicating whether a variation (a new design, a different button, or a new line of sales copy) actually improved performance. This supports data-driven decisions by making it unlikely that an observed improvement is merely the result of chance or random fluctuation rather than of the change itself.

Achieving statistical significance starts with a clear hypothesis, followed by a robust split-test design. The key performance indicators (KPIs) are then identified and tracked carefully to capture useful data. That data is then analysed statistically, often with a t-test, to determine whether the observed difference in performance between the control and the variant is larger than chance alone would plausibly produce.

A larger sample size makes the results more reliable: it increases the test’s statistical power, reducing the risk of missing a real effect (a false negative), while the significance level you choose (commonly 5%) caps the risk of a false positive. When the probability of observing a difference at least as large as the one measured, assuming the null hypothesis of no real difference, falls below that significance level, we can conclude that the variant has significantly outperformed the control. This, in turn, lets you confidently choose the variation to show future visitors, advancing the goal of Conversion Rate Optimisation.
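The text above mentions the t-test. Conversions are yes/no outcomes, and for them a two-proportion z-test is a common large-sample alternative; with samples of this size, a t-test on the same 0/1 data gives very similar results. The sketch below uses pooled variance and a two-sided p-value. The visitor and conversion counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference in conversion rates (pooled variance).

    Returns (z statistic, p-value). A positive z means B converts better than A.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Under the null hypothesis both groups share one conversion rate.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control 500/10,000 conversions, variant 580/10,000.
z, p = two_proportion_z_test(500, 10_000, 580, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("Significant at alpha = 0.05" if p < 0.05 else "Not significant at alpha = 0.05")
```

Here the variant’s 5.8% conversion rate against the control’s 5.0% gives a p-value of roughly 0.01, below the conventional 0.05 threshold.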

Analysing A/B Test Results

Data analysis is the critical final stage of split testing. It involves understanding, interpreting, and applying the information gathered from your experiments. This data, ranging from web traffic metrics to user interactions, validates the effectiveness of the variables you tested and provides insights into user behaviour and preferences.

When analysing A/B test results, it’s important to take a holistic view of the results and not just focus on a single metric. By comparing the results, you can identify which version outperformed the other. For example, if one variation has a higher conversion rate but a higher bounce rate, it may not be the best option overall. You may want to consider a variation that has a lower conversion rate but a higher engagement rate or longer average time on site, as these metrics can also contribute to your overall business goals.
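A simple way to keep several metrics in view is to summarise each variant on all of them at once. The sketch assumes session-level records with hypothetical fields for conversion, bounce and time on site; the tiny data set is illustrative only and far too small to be statistically meaningful.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    variant: str            # "A" (control) or "B" (variant)
    converted: bool
    bounced: bool
    seconds_on_site: float


def summarise(sessions: list[Session]) -> dict[str, dict[str, float]]:
    """Report several metrics per variant instead of a single headline number."""
    report = {}
    for v in sorted({s.variant for s in sessions}):
        group = [s for s in sessions if s.variant == v]
        report[v] = {
            "sessions": len(group),
            "conversion_rate": sum(s.converted for s in group) / len(group),
            "bounce_rate": sum(s.bounced for s in group) / len(group),
            "avg_seconds_on_site": mean(s.seconds_on_site for s in group),
        }
    return report


# Hypothetical sessions, for illustration only.
sessions = [
    Session("A", True, False, 120), Session("A", False, True, 8),
    Session("A", False, False, 95), Session("A", False, False, 80),
    Session("A", False, True, 5),
    Session("B", True, False, 60), Session("B", True, False, 40),
    Session("B", False, True, 6), Session("B", False, True, 4),
    Session("B", False, True, 5),
]

for variant, metrics in summarise(sessions).items():
    print(variant, metrics)
```

In this made-up data, variant B converts better (40% against 20%) but also bounces more and keeps visitors on the site for less time, which is exactly the kind of trade-off described above.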

Another important consideration when analysing A/B test results is segmenting your audience. Segmentation shows how different variations perform with different groups of users. For example, you may find that a certain variation performs better with mobile users than desktop users, or that it resonates more with a specific age group or geographic region. By understanding these differences, you can tailor your marketing efforts and user experience to better meet the needs of each segment. Treat segment-level findings with care, though: each segment contains fewer visitors than the whole test, so differences within it are less reliable, and the more segments you inspect, the more likely one of them shows a difference purely by chance.
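A segment-level breakdown can be computed by grouping results by segment and variant. The sketch assumes each visitor record carries a segment label (here, a hypothetical device type), the variant seen, and whether the visitor converted; the counts are made up.

```python
from collections import defaultdict


def conversion_by_segment(rows):
    """rows: iterable of (segment, variant, converted) tuples.

    Returns {segment: {variant: (conversions, visitors, conversion_rate)}}.
    """
    # counts[segment][variant] = [conversions, visitors]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for segment, variant, converted in rows:
        cell = counts[segment][variant]
        cell[0] += int(converted)
        cell[1] += 1
    return {
        seg: {var: (conv, n, conv / n) for var, (conv, n) in variants.items()}
        for seg, variants in counts.items()
    }


# Hypothetical aggregate results expanded into visitor-level rows.
rows = []
for seg, var, conv, n in [("mobile", "A", 40, 1000), ("mobile", "B", 60, 1000),
                          ("desktop", "A", 50, 1000), ("desktop", "B", 48, 1000)]:
    rows += [(seg, var, True)] * conv + [(seg, var, False)] * (n - conv)

for seg, variants in conversion_by_segment(rows).items():
    rate_a, rate_b = variants["A"][2], variants["B"][2]
    print(f"{seg}: A {rate_a:.1%}, B {rate_b:.1%}, relative lift {rate_b / rate_a - 1:+.1%}")
```

In this example the variant lifts mobile conversions while doing slightly worse on desktop. With only 1,000 visitors per cell, each segment’s difference should still be checked for significance before acting on it.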

Finally, it’s important to continue testing and iterating based on your results. A/B testing is an ongoing process, and there is always room for improvement. Use your results to inform your next test hypotheses, and continue refining and optimising your website, marketing campaigns, and user experience. With a data-driven approach, you can make informed decisions that will help you achieve your business goals and stay ahead of the competition.

Common Mistakes to Avoid when Conducting Split Tests

Split testing plays a vital role in Conversion Rate Optimisation, but the process is not without pitfalls. Missteps in executing these tests can undermine the integrity of the data collected and reduce the overall effectiveness of a digital marketing strategy.

The most prevalent mistake is running split tests without a clear hypothesis. A well-defined hypothesis is the basis of any successful split-testing experiment because it states what the experiment aims to prove or disprove. Another common error concerns sample size: marketers often underestimate the traffic needed to reach statistical significance, which leads to unreliable results. Inadequate test duration also produces inaccurate insights. Testing for too short a period may miss normal fluctuations in user behaviour, such as differences between weekdays and weekends, while testing for too long ties up traffic and resources that could be used for other beneficial tests.

Making the Most of Your Successful Split Tests

Successful split tests are gold mines of insight, brimming with valuable data that can strengthen your digital marketing strategies. They represent the culmination of a carefully orchestrated process that balances customer insight, creative execution, and strategic testing. Each success does not merely identify a winning variable; it reveals a piece of the puzzle that can be used to maximise conversions.

Making the most of a win begins with a thorough analysis of the successful test data, focused on understanding why the winning variation outperformed its counterparts. This could involve mapping out the customer journey to see how the experiment’s changes influenced behaviour, or conducting follow-up surveys to gather qualitative information. Such a detailed exploration can yield a deep understanding of the customer’s needs and preferences, which can then be used to refine ongoing and future experiments. From this process a cycle emerges: success leads to insight, insight drives improvement, and improvement paves the way for further successful split tests. This self-sustaining loop of continuous learning and enhancement is at the heart of effective digital marketing.

Lessons to Learn from Failed Split Tests

Split tests are integral to enhancing digital experiences, but they will not always produce a winner. These apparent defeats should not be seen as setbacks; rather, they are opportunities for learning and growth. Digging into tests that did not pan out as planned can reveal what needs to be improved and which variables to adjust to optimise conversion rates.

One of the primary lessons to be learnt from failed split tests is the importance of a precise, coherent hypothesis. Split tests sometimes fail because the underlying hypothesis is flawed or incomplete, which highlights the need for a robust premise before running the test. Another key lesson is that improving conversion rates is not simply about adding features to a site or an app. Sustainable gains usually come from work on aspects such as the unique selling proposition, the clarity of the message, and the simplicity of navigation. Ignoring these in favour of purely cosmetic changes can lead to unsuccessful tests, a signal that a different strategy is needed.

Convert Experiences

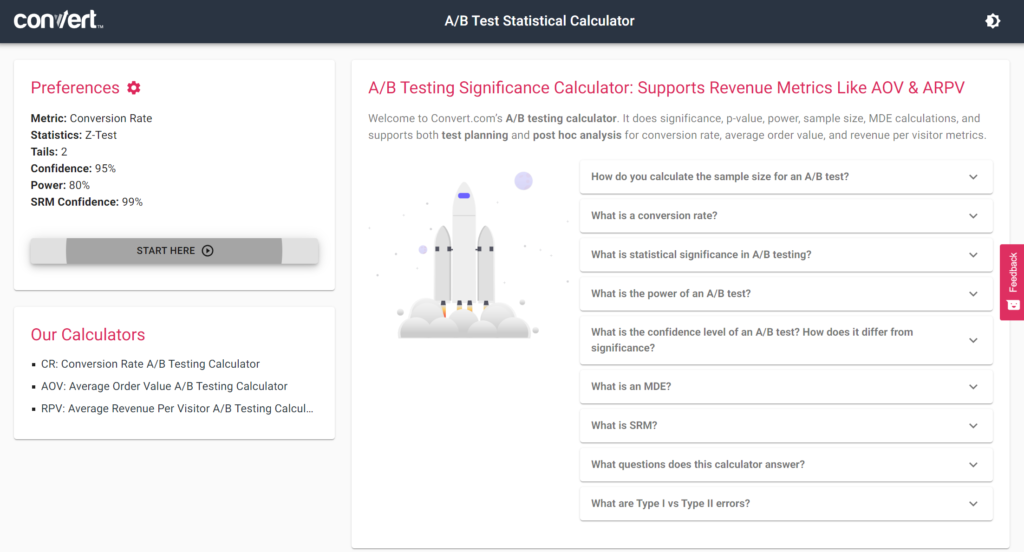

The calculator by Convert helps plan your testing strategy – should you go for the big swings, or can you have a mapped-out, iterative testing game plan?

It lets you:

- Understand sample size requirements: how many visitors are needed to detect a particular effect at specific power and confidence (risk) settings.

- Gauge testing cycles, given the sample size requirement.

- Consciously accept higher levels of risk (lower confidence) for tests where the impact of a false positive isn’t profoundly damaging to the business.

- Adjust power settings and MDEs (minimum detectable effects) to define your testing strategy.
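The sketch below is not Convert’s calculator; it is a rough, independent approximation of the same kind of planning. It uses the normal-approximation sample size formula for two conversion rates and tabulates visitors per variation, weeks per test and the resulting number of testing cycles per year for a few MDE and confidence settings. The baseline rate, weekly traffic, two-variation setup and the assumption that all traffic enters the test are hypothetical.

```python
import math
from statistics import NormalDist


def n_per_variant(p1, mde_rel, confidence, power):
    """Two-sided two-proportion sample size per variation (normal approximation)."""
    p2 = p1 * (1 + mde_rel)
    z_a = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_b = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    root = (z_a * math.sqrt(2 * p_bar * (1 - p_bar))
            + z_b * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2)))
    return math.ceil(root ** 2 / (p2 - p1) ** 2)


def plan(baseline, weekly_visitors, mdes, confidences, power=0.8):
    """Return (mde, confidence, n per variation, weeks per test, tests per year)."""
    rows = []
    for mde in mdes:
        for conf in confidences:
            n = n_per_variant(baseline, mde, conf, power)
            weeks = math.ceil(2 * n / weekly_visitors)  # two variations share the traffic
            rows.append((mde, conf, n, weeks, 52 // weeks))  # 0 means under one a year
    return rows


# Hypothetical site: 5% baseline conversion rate, 28,000 visitors a week.
for mde, conf, n, weeks, per_year in plan(0.05, 28_000, [0.05, 0.10, 0.20], [0.95, 0.90]):
    print(f"MDE {mde:>4.0%}  confidence {conf:.0%}: {n:>7,} per variation, "
          f"{weeks:>3} weeks per test, ~{per_year} tests/year")
```

Lowering confidence from 95% to 90%, or aiming only for larger effects, shortens each test and frees up more testing cycles, at the cost of more false positives or of missing smaller wins.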

A/B testing is a game-changer for digital marketers, enabling them to optimise their strategies and drive better results. By testing different variations and identifying what resonates most with your audience, you can make informed design decisions that improve user engagement, increase conversions, and ultimately grow your business.